publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2024

- Telematic music transmission, resistance and touch. Atau Tanaka. International Journal of Performance Arts and Digital Media, 2024

This article retraces the history of telematic performance from early videophone experiments by the Electronic Café in the 1980s, through a series of ambitious digital art installations at museums like the Centre Pompidou. Onto this history, I map a series of netmusic projects I initiated in this period, from ISDN performances at the Sonar Festival to my installation Global String at Ars Electronica. This sets the context for collaborative online performances held during the COVID pandemic with artists like Paul Sermon and the Chicks on Speed. I finish by describing the Hybrid Live project connecting Goldsmiths and Iklectik Art Labs in London with Stanford University’s CCRMA and SFJazz in California. I describe the low-latency audio transport used, the importance of audiovisual synchronisation and the computer vision abstractions resulting in a London-New York remote dance performance. By situating current work in these histories, and closely examining the qualities of the network necessary for the transmission of a sense of embodied experience – and therefore trust – we come to understand that network performance occurs in its own space, one distinct from physical co-presence.

@article{Telematic, author = {Tanaka, Atau}, title = {Telematic music transmission, resistance and touch}, journal = {International Journal of Performance Arts and Digital Media}, volume = {0}, number = {0}, pages = {1-19}, year = {2024}, publisher = {Routledge}, doi = {10.1080/14794713.2024.2329836}, url = {https://doi.org/10.1080/14794713.2024.2329836}, eprint = {https://doi.org/10.1080/14794713.2024.2329836}, }

2023

- An End-to-End Musical Instrument System That Translates Electromyogram Biosignals to Synthesized Sound. Atau Tanaka, Federico Visi, Balandino Di Donato, Martin Klang, and Michael Zbyszyński. Computer Music Journal, Jun 2023

This article presents a custom system combining hardware and software that senses physiological signals of the performer’s body resulting from muscle contraction and translates them to computer-synthesized sound. Our goal was to build upon the history of research in the field to develop a complete, integrated system that could be used by nonspecialist musicians. We describe the Embodied AudioVisual Interaction Electromyogram, an end-to-end system spanning wearable sensing on the musician’s body, custom microcontroller-based biosignal acquisition hardware, machine learning–based gesture-to-sound mapping middleware, and software-based granular synthesis sound output. A novel hardware design digitizes the electromyogram signals from the muscle with minimal analog preprocessing and treats them in an audio signal-processing chain as a class-compliant audio and wireless MIDI interface. The mapping layer implements an interactive machine learning workflow in a reinforcement learning configuration and can map gesture features to auditory metadata in a multidimensional information space. The system adapts existing machine learning and synthesis modules to work with the hardware, resulting in an integrated, end-to-end system. We explore its potential as a digital musical instrument through a series of public presentations and concert performances by a range of musical practitioners.

@article{Electromyogram, author = {Tanaka, Atau and Visi, Federico and Donato, Balandino Di and Klang, Martin and Zbyszyński, Michael}, title = {An End-to-End Musical Instrument System That Translates Electromyogram Biosignals to Synthesized Sound}, journal = {Computer Music Journal}, volume = {47}, number = {1}, pages = {64-84}, year = {2023}, month = jun, issn = {0148-9267}, doi = {10.1162/comj_a_00672}, url = {https://doi.org/10.1162/comj\_a\_00672}, eprint = {https://direct.mit.edu/comj/article-pdf/47/1/64/2386039/comj\_a\_00672.pdf}, }

2020

- Smart Hard Hat: Exploring Shape Changing Hearing Protection. Kwame Dogbe, Henry Glyde, Tim Nguyen, Themis Papathemistocleous, Katie Marquand, and Peter Bennett. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 2020

In this paper we present the Smart Hard Hat, an interactive hard hat that aims to protect the hearing health of construction workers by utilising shape-shifting technology. The device responds to loud noises by automatically closing earmuffs around the wearer’s ears, warning them of the damage being caused while removing the need for them to consciously protect themselves. Construction workers are particularly vulnerable to noise-induced hearing loss, so they were the target users for this design. Initial testing revealed that the Smart Hard Hat effectively blocks out noise, that the design could be expanded to new user groups, and that shape-changing technologies show potential for protecting personal health.

@inproceedings{SmartHardHat, author = {Dogbe, Kwame and Glyde, Henry and Nguyen, Tim and Papathemistocleous, Themis and Marquand, Katie and Bennett, Peter}, title = {Smart Hard Hat: Exploring Shape Changing Hearing Protection}, year = {2020}, isbn = {9781450368193}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3334480.3383063}, doi = {10.1145/3334480.3383063}, booktitle = {Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems}, pages = {1–6}, numpages = {6}, keywords = {noise-induced hearing loss, shape-changing technology, smart hard hat}, location = {Honolulu, HI, USA}, series = {CHI EA '20}, }

- Disruptabottle: Encouraging Hydration with an Overflowing Bottle. Adam Beddoe, Romana Burgess, Lucian Carp, Jessica Foster, Adam Fox, Leechay Moran, Peter Bennett, and Daniel Bennett. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 2020

We present a prototype for a targeted behavioural intervention, Disruptabottle, which explores what happens when a ‘nudge’ technology becomes a ‘shove’ technology. If the user does not drink water at a fast enough rate, the bottle overflows and spills. This reminds the user that they haven’t drunk enough, aggressively nudging them to drink in order to prevent further spillage. This persuasive technology attempts to motivate conscious decision making by drawing attention to the user’s drinking habits. Furthermore, we evaluated the emotions and opinions of potential users towards Disruptabottle, finding that participants generally received the device positively, with 59% reporting that they would use the device and 92% believing it to be an effective way of encouraging healthy drinking habits.

@inproceedings{Disruptabottle, author = {Beddoe, Adam and Burgess, Romana and Carp, Lucian and Foster, Jessica and Fox, Adam and Moran, Leechay and Bennett, Peter and Bennett, Daniel}, title = {Disruptabottle: Encouraging Hydration with an Overflowing Bottle}, year = {2020}, isbn = {9781450368193}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3334480.3382959}, doi = {10.1145/3334480.3382959}, booktitle = {Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems}, pages = {1–7}, numpages = {7}, keywords = {aversive technology, behaviour change, hydration habits, nudge theory, shove theory}, location = {Honolulu, HI, USA}, series = {CHI EA '20}, }

2019

- High-Tech and Tactile: Cognitive Enrichment for Zoo-Housed Gorillas. Fay E. Clark, Stuart I. Gray, Peter Bennett, Lucy J. Mason, and Katy V. Burgess. Frontiers in Psychology, 2019

The field of environmental enrichment for zoo animals, particularly great apes, has been revived by technological advancements such as touchscreen interfaces and motion sensors. However, direct animal-computer interaction (ACI) is impractical or undesirable for many zoos. We developed a modular cuboid puzzle maze for the troop of six Western lowland gorillas (Gorilla gorilla gorilla) at Bristol Zoo Gardens, United Kingdom. The gorillas could use their fingers or tools to interact with interconnected modules and remove food rewards. Twelve modules could be interchanged within the frame to create novel iterations with every trial. We took a screen-free approach to enrichment: replacing ACI with tactile, physically complex device components, and using hidden automatic sensors and cameras to log device use. The current study evaluated the gorillas’ behavioral responses to the device and assessed it as a form of “cognitive enrichment.” Five out of six gorillas used the device during monthly 1 h trials over a 6-month period. All users were female, including two infants, and there were significant individual differences in duration of device use. Only the three tool-using gorillas successfully extracted food rewards. Device use did not diminish over time, and gorillas took turns to use the device, either alone or as one mother-infant dyad. Our results suggest that the device was a form of cognitive enrichment for the study troop because it allowed gorillas to solve novel challenges, and device use was not associated with behavioral indicators of stress or frustration. However, device exposure had no significant effects on gorilla activity budgets. The device has the potential to be a sustainable long-term enrichment method, tailored to individual gorilla skill levels and motivations. Our study represents a technological advancement for gorilla enrichment, an area that had been particularly overlooked until now. We wholly encourage the continued development of this physical maze system for other great apes under human care, with or without computer logging technology.

@article{HighTechAndTactile, author = {Clark, Fay E. and Gray, Stuart I. and Bennett, Peter and Mason, Lucy J. and Burgess, Katy V.}, title = {High-Tech and Tactile: Cognitive Enrichment for Zoo-Housed Gorillas}, journal = {Frontiers in Psychology}, volume = {10}, year = {2019}, url = {https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2019.01574}, doi = {10.3389/fpsyg.2019.01574}, issn = {1664-1078}, }

2018

- NewsThings: Exploring Interdisciplinary IoT News Media Opportunities via User-Centred Design. John Mills, Mark Lochrie, Tom Metcalfe, and Peter Bennett. In Proceedings of the Twelfth International Conference on Tangible, Embedded, and Embodied Interaction, Stockholm, Sweden, 2018

Utilising a multidisciplinary and user-centred product and service design approach, ‘NewsThings’ explores the potential for domestic and professional internet of things (IoT) objects to convey journalism, media and information. By placing news audiences and industry at the centre of the prototyping process, the project’s web-connected objects explore how user requirements may best be met in a perceived post-digital environment. Following a research-through-design methodology and utilising a range of tools (such as workshops, cultural probes, market research and long-term prototype deployment with the public and industry), NewsThings aims to generate design insights and prototypes that could position the news media as active participants in the development of IoT products, processes and interactions. This work-in-progress paper outlines the project’s approach, methods and initial findings (up to and including the pre-deployment phase), and focuses on novel insights around user engagement with news and the multidisciplinary team’s responses to them.

@inproceedings{NewsThings, author = {Mills, John and Lochrie, Mark and Metcalfe, Tom and Bennett, Peter}, title = {NewsThings: Exploring Interdisciplinary IoT News Media Opportunities via User-Centred Design}, year = {2018}, isbn = {9781450355681}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3173225.3173267}, doi = {10.1145/3173225.3173267}, booktitle = {Proceedings of the Twelfth International Conference on Tangible, Embedded, and Embodied Interaction}, pages = {49–56}, numpages = {8}, keywords = {design, insights, interaction, iot, journalism, media, news, objects, personalization, physical, prototype, rtd}, location = {Stockholm, Sweden}, series = {TEI '18}, }

- CuffLink: A Wristband to Grab and Release Data Between Devices. Alex Church, Ethan Kenwrick, Yun Park, Luke Hudlass-Galley, Anmol Krishan Sachdeva, Zhiyu Yang, Jess McIntosh, and Peter Bennett. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal QC, Canada, 2018

We present CuffLink, a wristband designed to let users transfer files between devices intuitively using grab and release hand gestures. We propose using ultrasonic transceivers to enable device selection through pointing, and force-sensitive resistors (FSRs) to detect simple hand gestures. Our prototype demonstration shows that CuffLink can successfully transfer files between two computers using gestures. In preliminary testing, 83% of users said they would use a fully working device over typical sharing methods such as Dropbox and Google Drive. Beyond file sharing, we intend to make CuffLink a re-programmable wearable in the future.

@inproceedings{CuffLink, author = {Church, Alex and Kenwrick, Ethan and Park, Yun and Hudlass-Galley, Luke and Krishan Sachdeva, Anmol and Yang, Zhiyu and McIntosh, Jess and Bennett, Peter}, title = {CuffLink: A Wristband to Grab and Release Data Between Devices}, year = {2018}, isbn = {9781450356213}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3170427.3188494}, doi = {10.1145/3170427.3188494}, booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems}, pages = {1–6}, numpages = {6}, keywords = {wearable interface, ultrasonic transceivers, gesture recognition, force-sensitive resistors, cross-device files sharing}, location = {Montreal QC, Canada}, series = {CHI EA '18}, }

- Choptop: An Interactive Chopping Board. Tuana Celik, Orsolya Lukács-Kisbandi, Simon Partridge, Ross Gardiner, Gavin Parker, and Peter Bennett. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal QC, Canada, 2018

Choptop is an interactive chopping board that provides simple, relevant recipe guidance and convenient weighing and timing tools for inexperienced cooks. This assistance is particularly useful to individuals such as students who often have limited time to learn how to cook and are therefore drawn towards overpriced and unhealthy alternatives. Step-by-step instructions appear on Choptop’s display, eliminating the need for easily-damaged recipe books and mobile devices. Users navigate Choptop by pressing the chopping surface, which is instrumented with load sensors. Informal testing shows that Choptop may significantly improve the ease and accuracy of following recipes over traditional methods. Users also reported increased enjoyment while following complex recipes.

@inproceedings{Choptop, author = {Celik, Tuana and Luk\'{a}cs-Kisbandi, Orsolya and Partridge, Simon and Gardiner, Ross and Parker, Gavin and Bennett, Peter}, title = {Choptop: An Interactive Chopping Board}, year = {2018}, isbn = {9781450356213}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3170427.3188486}, doi = {10.1145/3170427.3188486}, booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems}, pages = {1–6}, numpages = {6}, keywords = {smart kitchen, input devices, food preparation, consumer health}, location = {Montreal QC, Canada}, series = {CHI EA '18}, }

2017

- EchoSnap and PlayableAle: Exploring Audible Resonant Interaction. Peter Bennett, Christopher Haworth, Gascia Ouzounian, and James Wheale. In Proceedings of the Eleventh International Conference on Tangible, Embedded, and Embodied Interaction, Yokohama, Japan, 2017

This paper presents two projects that use the audible resonance of hollow objects as the basis of novel tangible interaction. EchoSnap explores how an audio feedback loop created between a mobile device’s microphone and speaker can be used to playfully explore and probe the resonant characteristics of hollow objects, using the resulting sounds to control a mobile device. With PlayableAle, the tone made by blowing across the top of a bottle is used as a method of controlling a game. The work presented in this paper builds upon our previous work exploring the concept of resonant bits, where digital information is given resonant properties that can be explored through physical interaction. In particular, this paper expands upon the concept by looking at resonance in the physical rather than the digital domain. Using EchoSnap and PlayableAle as illustrations, we present a preliminary design space for structuring the continuing development of audible resonant interaction.

@inproceedings{EchoSnapAndPlayableAle, author = {Bennett, Peter and Haworth, Christopher and Ouzounian, Gascia and Wheale, James}, title = {EchoSnap and PlayableAle: Exploring Audible Resonant Interaction}, year = {2017}, isbn = {9781450346764}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3024969.3025091}, doi = {10.1145/3024969.3025091}, booktitle = {Proceedings of the Eleventh International Conference on Tangible, Embedded, and Embodied Interaction}, pages = {543–549}, numpages = {7}, keywords = {mobile interaction, resonant bits, tangible user interface}, location = {Yokohama, Japan}, series = {TEI '17}, }

2016

- Tangible Interfaces for Interactive Evolutionary Computation. Thomas Mitchell, Peter Bennett, Sebastian Madgwick, Edward Davies, and Philip Tew. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, California, USA, 2016

Interactive evolutionary computation (IEC) is a powerful human-machine optimisation procedure for evolving solutions to complex design problems. In this paper we introduce the novel concept of Tangible Interactive Evolutionary Computation (TIEC), which leverages the benefits of tangible user interfaces to enhance the IEC process and experience and to alleviate user fatigue. An example TIEC system is presented and used to evolve biomorph images, recreating the canonical IEC application, The Blind Watchmaker program. An expanded version of the system is also used to design visual states for danceroom Spectroscopy, an atomic visualisation platform that allows participants to explore quantum phenomena through movement and dance. Initial findings from an informal observational test are presented along with the results of a pilot study evaluating the potential of TIEC.

@inproceedings{TanglibleInterfacesForIEC, author = {Mitchell, Thomas and Bennett, Peter and Madgwick, Sebastian and Davies, Edward and Tew, Philip}, title = {Tangible Interfaces for Interactive Evolutionary Computation}, year = {2016}, isbn = {9781450340823}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2851581.2892405}, doi = {10.1145/2851581.2892405}, booktitle = {Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems}, pages = {2609–2616}, numpages = {8}, keywords = {aesthetic evolution, interactive evolutionary computation, tangible user interface}, location = {San Jose, California, USA}, series = {CHI EA '16}, }

- Tangibles for Health Workshop. Audrey Girouard, David McGookin, Peter Bennett, Orit Shaer, Katie A. Siek, and Marilyn Lennon. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, California, USA, 2016

eHealth research, which employs technology and HCI to support wellbeing, recovery and the maintenance of conditions, has seen significant progress in recent years. However, such research has primarily focused on mobile "apps" running on commercial smartphones. We believe that Tangible User Interfaces (TUIs) offer many physical and interaction qualities that would benefit the eHealth community. Yet there is little research that combines the two. Tangibles for Health will bring together leading researchers in tangible user interaction and health to explore the potential of tangibles as applied to healthcare and wellbeing.

@inproceedings{TangiblesForHealth, author = {Girouard, Audrey and McGookin, David and Bennett, Peter and Shaer, Orit and Siek, Katie A. and Lennon, Marilyn}, title = {Tangibles for Health Workshop}, year = {2016}, isbn = {9781450340823}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2851581.2856469}, doi = {10.1145/2851581.2856469}, booktitle = {Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems}, pages = {3461–3468}, numpages = {8}, keywords = {wellbeing, tangible user interfaces, healthcare, e-health, accessibility, HCI}, location = {San Jose, California, USA}, series = {CHI EA '16}, }

2015

- Resonant Bits: Controlling Digital Musical Instruments with Resonance and the Ideomotor Effect. Peter Bennett, Jarrod Knibbe, Florent Berthaut, and Kirsten Cater. In Proceedings of the International Conference on New Interfaces for Musical Expression, Baton Rouge, Louisiana, USA, 2015

Resonant Bits proposes giving digital information resonant dynamic properties, requiring skill and concerted effort for interaction. This paper applies resonant interaction to musical control, exploring musical instruments that are controlled through both purposeful and subconscious resonance. We detail three exploratory prototypes, the first two illustrating the use of resonant gestures and the third focusing on the detection and use of the ideomotor (subconscious micro-movement) effect.

@inproceedings{ResonantBits1, author = {Bennett, Peter and Knibbe, Jarrod and Berthaut, Florent and Cater, Kirsten}, title = {Resonant Bits: Controlling Digital Musical Instruments with Resonance and the Ideomotor Effect}, year = {2015}, isbn = {9780692495476}, publisher = {The School of Music and the Center for Computation and Technology (CCT), Louisiana State University}, address = {Baton Rouge, Louisiana, USA}, booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression}, pages = {176–177}, numpages = {2}, location = {Baton Rouge, Louisiana, USA}, series = {NIME 2015}, }

- TopoTiles: Storytelling in Care Homes with Topographic Tangibles. Peter Bennett, Heidi Hinder, Seana Kozar, Christopher Bowdler, Elaine Massung, Tim Cole, Helen Manchester, and Kirsten Cater. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, Seoul, Republic of Korea, 2015

In this paper we present our initial ethnographic work from developing TopoTiles, Tangible User Interfaces designed to aid storytelling, reminiscence and community building in care homes. Our fieldwork has raised a number of questions, which we discuss in this paper, including: How can landscape tangibles be used as proxy objects, standing in for landscapes and objects unavailable to the storyteller? How can tangible interfaces be used in an indirect or peripheral manner to aid storytelling? Can miniature landscapes aid recollection and storytelling through embodied interaction? Are ambiguous depictions conducive to storytelling? Can topographic tangibles encourage inclusivity in group sharing situations? We share our initial findings on these questions and show how they will inform further TopoTiles design work.

@inproceedings{TopoTiles, author = {Bennett, Peter and Hinder, Heidi and Kozar, Seana and Bowdler, Christopher and Massung, Elaine and Cole, Tim and Manchester, Helen and Cater, Kirsten}, title = {TopoTiles: Storytelling in Care Homes with Topographic Tangibles}, year = {2015}, isbn = {9781450331463}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2702613.2732918}, doi = {10.1145/2702613.2732918}, booktitle = {Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems}, pages = {911–916}, numpages = {6}, keywords = {tangible user interface, storytelling, reminiscence}, location = {Seoul, Republic of Korea}, series = {CHI EA '15}, }

- FugaciousFilm: Exploring Attentive Interaction with Ephemeral Material. Hyosun Kwon, Shashank Jaiswal, Steve Benford, Sue Ann Seah, Peter Bennett, Boriana Koleva, and Holger Schnädelbach. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 2015

This paper introduces FugaciousFilm, a soap-film-based touch display, as a platform for Attentive Interaction that encourages the user to remain highly focused throughout their use of the interface. Previous work on ephemeral user interfaces has primarily focused on the development of ambient and peripheral displays. In contrast, FugaciousFilm is an ephemeral display that aims to promote highly attentive interaction. We present the iterative process of developing this interface, spanning technical explorations, prototyping and a user study. We report lessons learnt when designing the interface, ranging from the soap film mixture to the impact of frames and apertures. We then describe the development of the touch, push, pull and pop interactions. Our user study shows how FugaciousFilm led to focused and attentive interactions during a tournament of enhanced Tic-Tac-Toe. We finish by discussing how the principles of vulnerability and delicacy can motivate the design of attentive ephemeral interfaces.

@inproceedings{FugaciousFilm, author = {Kwon, Hyosun and Jaiswal, Shashank and Benford, Steve and Seah, Sue Ann and Bennett, Peter and Koleva, Boriana and Schn\"{a}delbach, Holger}, title = {FugaciousFilm: Exploring Attentive Interaction with Ephemeral Material}, year = {2015}, isbn = {9781450331456}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2702123.2702206}, doi = {10.1145/2702123.2702206}, booktitle = {Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems}, pages = {1285–1294}, numpages = {10}, keywords = {tangible interaction, soap film, non-ambient interaction, ephemeral user interfaces, attentive interaction}, location = {Seoul, Republic of Korea}, series = {CHI '15}, }

- Resonant Bits: Harmonic Interaction with Virtual Pendulums. Peter Bennett, Stuart Nolan, Ved Uttamchandani, Michael Pages, Kirsten Cater, and Mike Fraser. In Proceedings of the Ninth International Conference on Tangible, Embedded, and Embodied Interaction, Stanford, California, USA, 2015

This paper presents the concept of Resonant Bits, an interaction technique for encouraging engaging, slow and skilful interaction with tangible, mobile and ubiquitous devices. The technique is based on the resonant excitation of harmonic oscillators and allows the exploration of a number of novel types of tangible interaction, including: ideomotor control, where subliminal micro-movements accumulate over time to produce a visible outcome; indirect tangible interaction, where a number of devices can be controlled simultaneously through an intermediary object such as a table; and slow interaction, with meditative and repetitive gestures being used for control. The Resonant Bits concept is tested as an interaction method in a study where participants resonate with virtual pendulums on a mobile device. The Harmonic Tuner, a resonance-based music player, is presented as a simple example of using resonant bits. Overall, our ambition in proposing the Resonant Bits concept is to promote skilful, engaging and ultimately rewarding forms of interaction with tangible devices that take time and patience to learn and master.

@inproceedings{ResonantBits2, author = {Bennett, Peter and Nolan, Stuart and Uttamchandani, Ved and Pages, Michael and Cater, Kirsten and Fraser, Mike}, title = {Resonant Bits: Harmonic Interaction with Virtual Pendulums}, year = {2015}, isbn = {9781450333054}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2677199.2680569}, doi = {10.1145/2677199.2680569}, booktitle = {Proceedings of the Ninth International Conference on Tangible, Embedded, and Embodied Interaction}, pages = {49–52}, numpages = {4}, keywords = {tangible user interface, slow technology, resonance, ideomotor control, human-computer interaction}, location = {Stanford, California, USA}, series = {TEI '15}, }

2014

- SensaBubble: a chrono-sensory mid-air display of sight and smell. Sue Ann Seah, Diego Martinez Plasencia, Peter D. Bennett, Abhijit Karnik, Vlad Stefan Otrocol, Jarrod Knibbe, Andy Cockburn, and Sriram Subramanian. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, Ontario, Canada, 2014

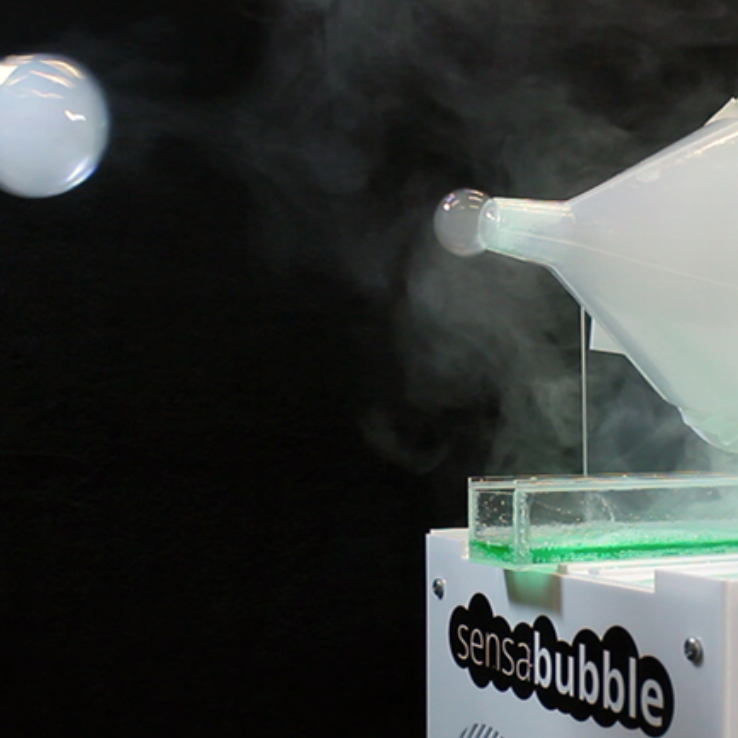

We present SensaBubble, a chrono-sensory mid-air display system that generates scented bubbles to deliver information to the user via a number of sensory modalities. The system reliably produces single bubbles of specific sizes along a directed path. Each bubble produced by SensaBubble is filled with fog containing a scent relevant to the notification. The chrono-sensory aspect of SensaBubble means that information is presented both temporally and multimodally. Temporal information is enabled through two forms of persistence: first, a visual display projected onto the bubble, which endures only until the bubble bursts; second, a scent released when the bubble bursts, which slowly disperses and leaves a longer-lasting perceptible trace of the event. We report details of SensaBubble’s design and implementation, as well as results of technical and user evaluations. We then discuss and demonstrate how SensaBubble can be adapted for use in a wide range of application contexts, from an ambient peripheral display for persistent alerts to an engaging display for gaming or education.

@inproceedings{SensaBubble, author = {Seah, Sue Ann and Martinez Plasencia, Diego and Bennett, Peter D. and Karnik, Abhijit and Otrocol, Vlad Stefan and Knibbe, Jarrod and Cockburn, Andy and Subramanian, Sriram}, title = {SensaBubble: a chrono-sensory mid-air display of sight and smell}, year = {2014}, isbn = {9781450324731}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2556288.2557087}, doi = {10.1145/2556288.2557087}, booktitle = {Proceedings of the SIGCHI Conference on Human Factors in Computing Systems}, pages = {2863–2872}, numpages = {10}, keywords = {multimodality., interactive displays, ephemeral interfaces, bubbles, ambient displays}, location = {Toronto, Ontario, Canada}, series = {CHI '14}, }

- Quick and dirty: streamlined 3D scanning in archaeology. Jarrod Knibbe, Kenton P. O’Hara, Angeliki Chrysanthi, Mark T. Marshall, Peter D. Bennett, Graeme Earl, Shahram Izadi, and Mike Fraser. In Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing, Baltimore, Maryland, USA, 2014

Capturing data is a key part of archaeological practice, whether for preserving records or to aid interpretation. But the technologies used are complex and expensive, and using them involves time-consuming processes. These processes force a separation between ongoing interpretive work and capture. Through two field studies we elicit more detail about what is important in this interpretive work and what might be gained by integrating capture technology more closely with these practices. Drawing on these insights, we go on to present a novel, portable, wireless 3D modeling system that emphasizes "quick and dirty" capture. We discuss its design rationale in relation to our field observations and evaluate this rationale further by giving the system to archaeological experts to explore in a variety of settings. While our device compromises on the resolution of traditional 3D scanners, its support of interpretation through an emphasis on real-time capture, review and manipulability suggests it could be a valuable tool for the future of archaeology.

@inproceedings{QuickAndDirty, author = {Knibbe, Jarrod and O'Hara, Kenton P. and Chrysanthi, Angeliki and Marshall, Mark T. and Bennett, Peter D. and Earl, Graeme and Izadi, Shahram and Fraser, Mike}, title = {Quick and dirty: streamlined 3D scanning in archaeology}, year = {2014}, isbn = {9781450325400}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2531602.2531669}, doi = {10.1145/2531602.2531669}, booktitle = {Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work \& Social Computing}, pages = {1366–1376}, numpages = {11}, keywords = {interpretation, archaeology, 3d scanning}, location = {Baltimore, Maryland, USA}, series = {CSCW '14}, }

2013

- Haptic cues: texture as a guide for non-visual tangible interaction. Katrin Wolf and Peter D. Bennett. In CHI ’13 Extended Abstracts on Human Factors in Computing Systems, Paris, France, 2013

Tangible User Interfaces (TUIs) represent digital information via a number of sensory modalities including the haptic, visual and auditory senses. We suggest that interaction with tangible interfaces is commonly governed primarily through visual cues, despite the emphasis on tangible representation. We do not doubt that visual feedback offers rich interaction guidance, but argue that emphasis on haptic and auditory feedback could support or substitute vision in situations of visual distraction or impairment. We have developed a series of simple TUIs that allow for the haptic and auditory exploration of visually hidden textures. Our technique is to transmit the force feedback of the texture to the user via the attraction of a ball bearing to a magnet that the user manipulates. This allows the detail of the texture to be presented to the user while visually presenting an entirely flat surface. The use of both opaque and transparent materials allows for controlling the texture visibility for comparative purposes. The resulting Feelable User Interface (FUI), shown in Fig. 1, allows for the exploration of which textures and structures are useful for haptic guidance. The findings of our haptic exploration should provide a basic understanding of the use of haptic cues for interacting with tangible objects that are visually hidden or are in the user’s visual periphery.

@inproceedings{Hapticcues, author = {Wolf, Katrin and Bennett, Peter D.}, title = {Haptic cues: texture as a guide for non-visual tangible interaction}, year = {2013}, isbn = {9781450319522}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2468356.2468642}, doi = {10.1145/2468356.2468642}, booktitle = {CHI '13 Extended Abstracts on Human Factors in Computing Systems}, pages = {1599–1604}, numpages = {6}, keywords = {texture, tangible user interface, haptic, guidance}, location = {Paris, France}, series = {CHI EA '13}, }

2012

- ChronoTape: tangible timelines for family history. Peter Bennett, Mike Fraser, and Madeline Balaam. In Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction, Kingston, Ontario, Canada, 2012

An explosion in the availability of online records has led to surging interest in genealogy. In this paper we explore the present state of genealogical practice, with a particular focus on how the process of research is recorded and later accessed by other researchers. We then present our response, ChronoTape, a novel tangible interface for supporting family history research. The ChronoTape is an example of a temporal tangible interface, an interface designed to enable the tangible representation and control of time. We use the ChronoTape to interrogate the value relationships between physical and digital materials, personal and professional practices, and the ways that records are produced, maintained and ultimately inherited. In contrast to designs that support existing genealogical practice, ChronoTape captures and embeds traces of the researcher within the document of their own research, in three ways: (i) it ensures physical traces of digital research; (ii) it generates personal material around the use of impersonal genealogical data; (iii) it allows for graceful degradation of both its physical and digital components in order to deliberately accommodate the passage of information into the future.

@inproceedings{ChronoTape, author = {Bennett, Peter and Fraser, Mike and Balaam, Madeline}, title = {ChronoTape: tangible timelines for family history}, year = {2012}, isbn = {9781450311748}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2148131.2148144}, doi = {10.1145/2148131.2148144}, booktitle = {Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction}, pages = {49–56}, numpages = {8}, keywords = {temporal tangible user interfaces, personalisation, inheritance, genealogy}, location = {Kingston, Ontario, Canada}, series = {TEI '12}, }

2008

- The BeatBearing: a Tangible Rhythm Sequencer. Peter Bennett and Sile O’Modhrain. In Electronic Proceedings of NordiCHI ’08: 5th Nordic Conference on Computer-Human Interaction, Lund, Sweden, 2008

The BeatBearing is a novel musical instrument that allows users to manipulate rhythmic patterns through the placement of ball bearings on a grid. The BeatBearing has been developed as an explorative design case for investigating how the theory of embodied interaction can inform the design of new digital musical instruments.

@inproceedings{bennett_beatbearing_2008, title = {The {BeatBearing}: a {Tangible} {Rhythm} {Sequencer}}, booktitle = {Electronic {Proceedings} of {NordiCHI} '08: 5th {Nordic} {Conference} on {Computer}-{Human} {Interaction}}, author = {Bennett, Peter and O'Modhrain, Sile}, year = {2008}, note = {event-place: Lund, Sweden}, }